I attended the SAP for Utilities conference in San Antonio last week, where I gave the closing keynote (which I'll write up in another post).

I was struck, though, by two themes that recurred in all of the opening keynotes:

1. All of the opening keynote speakers mentioned social media. This was a huge relief, because my closing talk was due to be on social media, so the speakers were setting the stage nicely!

2. The speakers talked up mobility big-time.

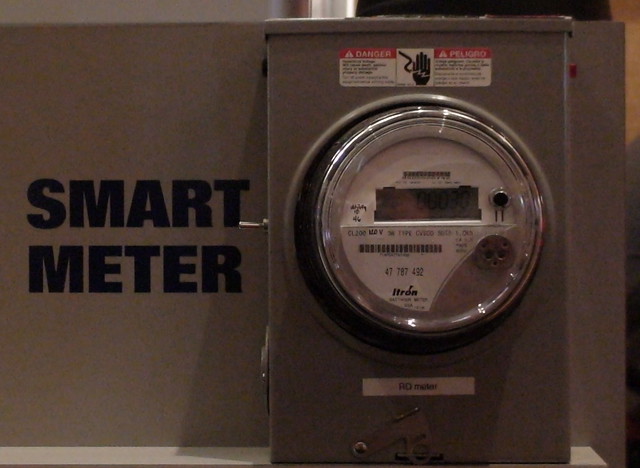

I had expected some talk of mobility, along with HANA, Smart Grids, Cloud and Analytics, the usual gamut of topics at these events. They were indeed all addressed, but there was a definite emphasis on mobility over every other topic.

This is understandable: with the advent of tablets and smartphones, computing is going mobile, no question about it. I think it was Cisco's CTO Paul De Martini who dropped the stat that 200,000 new Android devices are being activated daily.

This impacts utility companies on two fronts:

1. On the customer front, utilities can now drop the idea of in-home energy management devices and instead assume that the vast majority of their customers have access to a smartphone or tablet.

2. On the employee front, utilities have lots of mobile workers, and the ability to connect them easily back into corporate applications will be game-changing.

In my talk on social media strategies for utilities, I suggested that utilities equip every truck roll with a smartphone. That way, when crews arrive on site to repair a downed line (or whatever), they can take a quick video of the damage and the people working on site, and give a rough estimated time of recovery in the voice-over. The video can be uploaded to YouTube at the touch of a button on the phone, so call center operators and social media teams can direct enquiries to it, immediately helping to defuse customers' frustration at having their power cut.

Programs like this can even be proactive, and the customer service benefits of rolling them out should not be underestimated.

Utilities are entering a new, more challenging era. Mobility solutions (especially when combined with social media) will be a powerful tool to help them meet these challenges.

Full disclosure – SAP is a GreenMonk client and paid travel and expenses for me to attend the conference.

Photo credit: Tom Raftery