IBM has announced the completion of its acquisition of The Weather Company’s B2B, mobile and cloud-based web properties: weather.com, Weather Underground, The Weather Company brand and WSI, its global business-to-business brand.

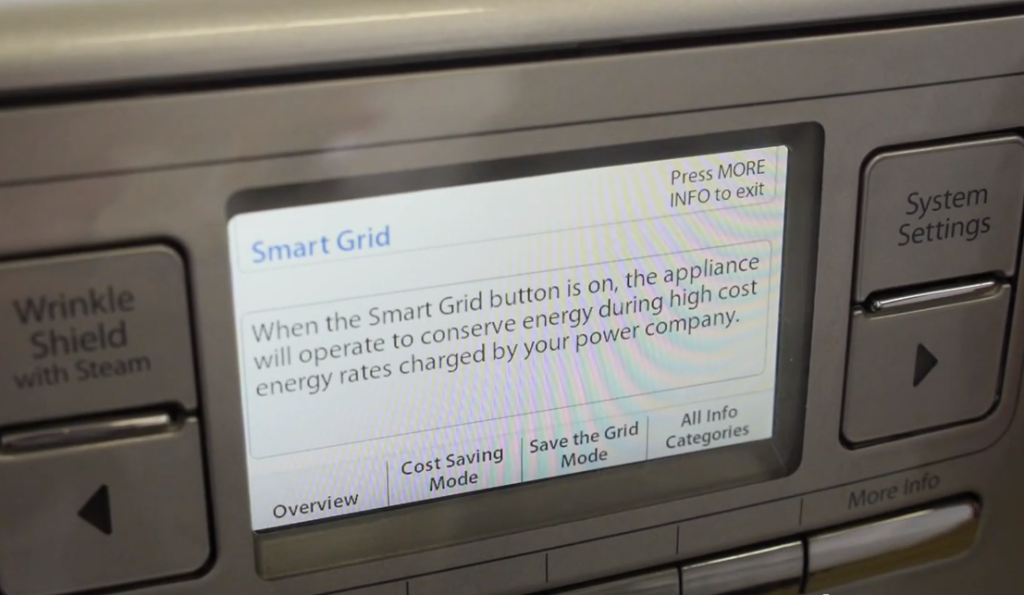

At first blush this may not seem like an obvious pairing, but the Weather Company’s products are not just its free apps for your smartphone. It also has specialised products for the media industry, the insurance industry, energy and utilities, government, and even retail. All of these verticals are traditional IBM customers.

Then, when you factor in that the Weather Company’s cloud platform takes in over 100 Gbytes of information per day from 2.2 billion weather forecast locations and produces over 300 Gbytes of added products for its customers, it quickly becomes obvious that the platform is highly optimised for Big Data and the Internet of Things.

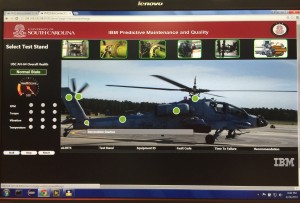

This platform will now serve as a backbone for IBM’s Watson IoT.

Watson, you will remember, is IBM’s natural language processing and machine learning platform, which famously took on and beat two former champions on the quiz show Jeopardy!. Since then, IBM has opened up APIs to Watson to allow developers to add cognitive computing features to their apps, and more recently IBM announced Watson IoT Cloud “to extend the power of cognitive computing to the billions of connected devices, sensors and systems that comprise the IoT”.

Given Watson’s relentless moves to cloud and IoT, this acquisition starts to make a lot of sense.

IBM further announced that it will use its network of cloud data centres to expand weather.com into five new markets (China, India, Brazil, Mexico and Japan), “with the goal of increasing its global user base by hundreds of millions over the next three years”.

With Watson’s deep learning abilities, and all that weather data, one wonders if IBM will be in a position to help scientists researching climate change. At the very least it will help the rest of us be prepared for its consequences.

New developments in AI and deep learning are being announced virtually weekly now by Microsoft, Google and Facebook, amongst others. This is a space which, it is safe to say, will completely transform how we interact with computers and data.