If you were going to build one of the world’s largest data centres, you wouldn’t intuitively site it in the middle of the Nevada desert, but that’s where Switch sited their SuperNAP campus. I went on a tour of the data centre recently when in Las Vegas for IBM’s Pulse 2012 event.

The data centre is impressive. And I’ve been in a lot of data centres (I even co-founded one in Ireland and was part of its design team).

The first thing that strikes you when visiting the SuperNAP is just how seriously they take their security. They have outlined their various security layers in some detail on their website, but nothing prepares you for the reality of it. As a simple example, throughout our entire guided tour of the data centre floor space we were followed by one of Switch’s armed security officers!

The data centre itself is just over 400,000 sq ft in size, with plenty of room within the campus to build out two or three more similarly sized data centres should the need arise. And although the data centre is one of the world’s largest, at 1,500 Watts per square foot it is also quite dense. This density allows for racks of 25kW, and during the tour we were shown cages containing 40 x 25kW racks which were being handled with apparent ease by Switch’s custom cooling infrastructure.
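
To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers quoted above; the function names are my own, and treating the 1,500 W per sq ft figure as applying uniformly to the floor area each rack draws on is a simplifying assumption, not something Switch stated.

```python
# Figures quoted in the post
RACK_POWER_KW = 25           # power per rack
RACKS_PER_CAGE = 40          # racks in the cages shown on the tour
DENSITY_W_PER_SQ_FT = 1500   # quoted power density


def cage_power_kw(racks=RACKS_PER_CAGE, rack_kw=RACK_POWER_KW):
    """Total power drawn by one fully loaded cage, in kW."""
    return racks * rack_kw


def floor_area_per_rack_sq_ft(rack_kw=RACK_POWER_KW,
                              density_w_per_sq_ft=DENSITY_W_PER_SQ_FT):
    """Floor area one rack accounts for, ASSUMING the quoted density
    applies uniformly (a simplification for illustration only)."""
    return rack_kw * 1000 / density_w_per_sq_ft


print(cage_power_kw())                        # 1000 (kW, i.e. 1 MW per cage)
print(round(floor_area_per_rack_sq_ft(), 1))  # 16.7 (sq ft per rack)
```

In other words, a single cage of 40 racks represents about a megawatt of load, which is why the cooling design matters so much.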

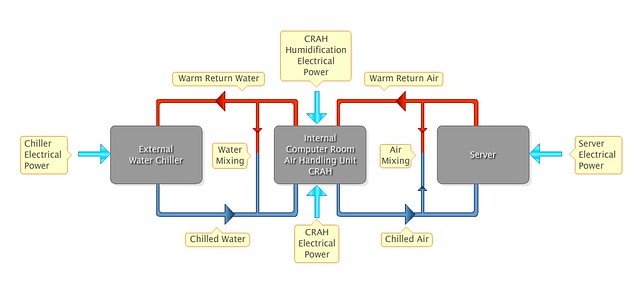

Because SuperNAP wanted to build out a large-scale, dense data centre, they had to custom design their own cooling infrastructure. They use a hot aisle containment system, with the cold air coming in from overhead and the hot air drawn out through the top of the contained aisles.

The first implication of this is that the facility needs no raised floors. It also means that as you walk around the data centre, you are walking in the cold aisle. As part of the facility’s design, the T-SCIFs (thermal separate compartment in facility, i.e. heat containment structures) are where the contained hot aisle’s air is extracted, while the external TSC600 quad process chiller systems generate the cold air outside for delivery to the data floor. This design means there is no need for any water piping within the data room, which is a nice feature.

Through an accident of history (involving Enron!), the SuperNAP is arguably the best connected data centre in the world, a fact they can use to the advantage of their clients when negotiating connectivity pricing. Consequently, connectivity in the SuperNAP is some of the cheapest available.

As a result of all this, the vast majority of enterprise cloud computing providers have a base in the SuperNAP. So does the 56 petabyte eBay Hadoop cluster (yes, 56 petabytes!).

Given that I have regularly bemoaned cloud computing’s increasing energy and carbon footprint on this blog, you won’t be surprised to know that one of my first questions to Switch was about their energy provider, NV Energy.

According to NV Energy’s 2010 Sustainability Report [PDF], coal makes up 21% of the generation mix and gas accounts for another 63.3%. While just over 84% of electricity generation coming from fossil fuels sounds high, the 21% figure for coal is low by US standards, as the graph on the right details.

Still, it is a long way off the 100% of electricity from renewables that Verne Global’s new data centre has.

Apart from the power generation profile, which, in fairness to Switch, is outside their control (and could be considerably worse), the SuperNAP is by far the most impressive data centre I have ever been in.

Photo credit: Switch
